Beginner's Guide to Generative AI

Especially Neural Networks (The Tech Behind Gen AI)--Part 2 of AI Series

Imagine waking up one day to find that a machine has designed the next viral fashion trend without human input. Sounds futuristic? Well, that future is already here.

With the rise of ChatGPT, Gemini, and now Deepseek, Generative AI is reshaping creativity.

It’s the kind of technology that Google’s CEO once claimed is 'more profound than fire or electricity.' A bold statement? Maybe. But considering what Generative AI can do, he might not be wrong.

Unlike traditional AI, which analyzes data and predicts patterns (like Netflix suggesting your next binge-watch), Generative AI actually creates something new.

That’s a game-changer!

Types of Generative AI

Large Language Model (LLM) frameworks like GPT that generate new text comprise only one category of Generative AI.

Other categories include Image Generators such as DALL.E, Video Generators like Runway Gen-2, and Code Generators similar to GitHub Copilot.

Gen AI is built on top of an architecture known as neural networks, which is a significant part of today’s discussion.

That’s because whether you are a hobbyist, tech enthusiast, or non-techie fascinated by AI, mastering neural networks or, at the very least, understanding the concept is essential to building functional, profitable and useful solutions with Gen AI.

AI in Africa Must Be Functional, Profitable & Useful

Today, I wanted to share this video that’s been stuck in my mind for weeks.

AI in Africa

Neural Networks 101

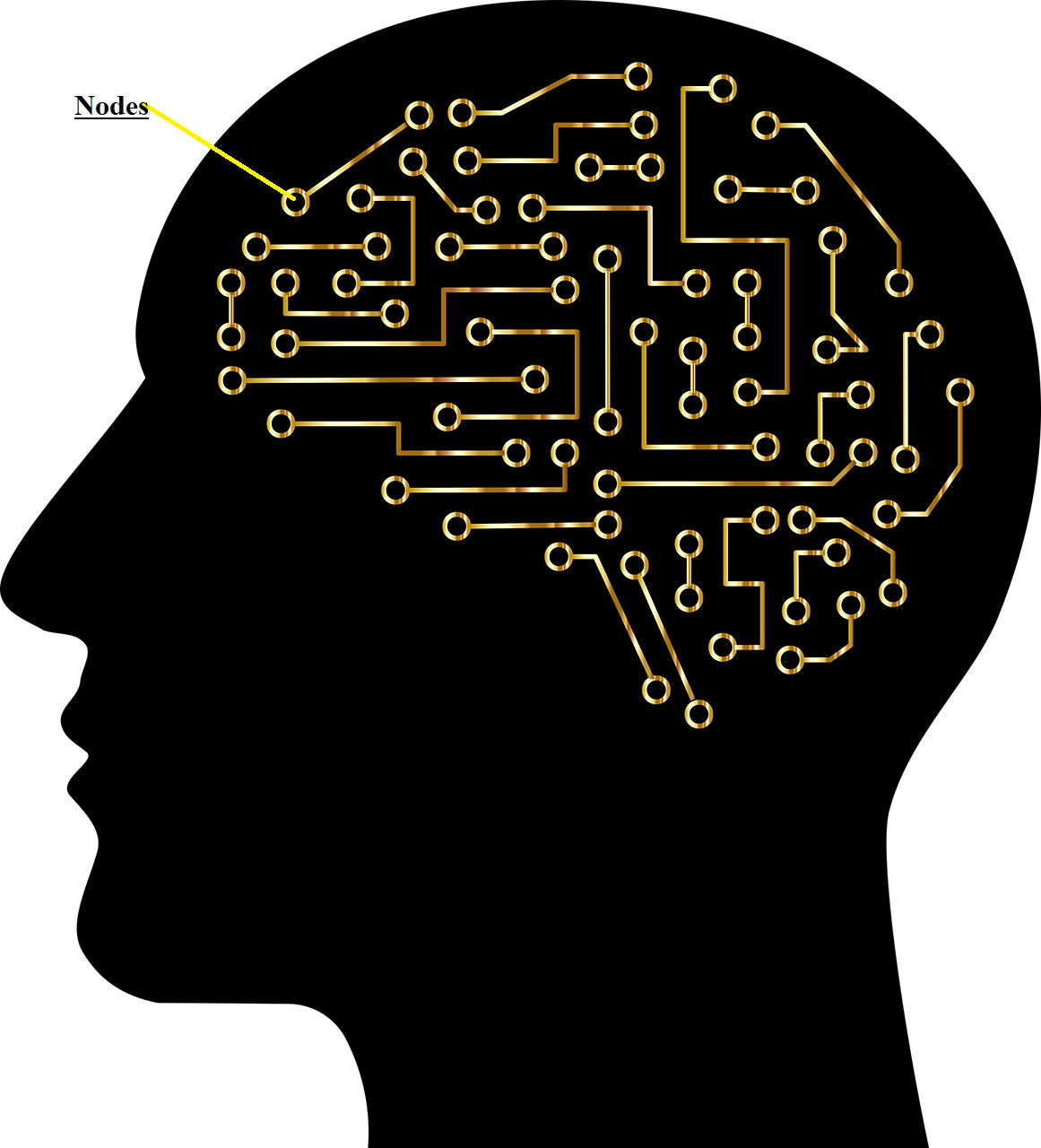

Neural networks are artificial networks that mirror how the human brain works. A human brain comprises billions of neurons—cells that send and receive signals.

As humans acquire new information, neurons form new connections, adding up to quadrillions of connections.

A neural network is made up of artificial neurons that, instead of cells, consist of software modules called nodes.

The core function of these neurons is to take in information, work on it and then pass it along—but that doesn’t entirely paint a picture. Let’s use an analogy instead.

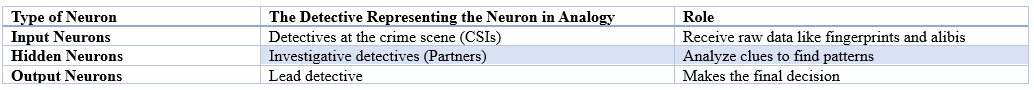

Imagine a team of seven homicide detectives in a police station. Each detective on the team has a speciality, but they are divided into three larger groups—four crime scene investigators, two detectives, and one lead detective.

When a new case comes in, the detectives work together to solve the case.

The four CSIs examine the scene, gather clues and analyze them in labs.

The two partners sort through the clues to find patterns.

In consultation with all the detectives, the lead detective gives the directive to arrest the culprit.

At first (when the team is first constituted), the detectives might make mistakes, leading to a low closure rate. Over time, the detectives learn from past cases and get better at solving mysteries (in a neural network, this is called training).

The detectives represent neurons in a neural network.

For example, in an image recognition neural network:

The input neurons receive raw pixels (edges, colors), examine and identify them, and then pass them along.

The hidden neurons investigate and detect patterns (shapes, colors).

Output neurons make the final decision (e.g., this is a cat!).

But how did the output neuron know it was a cat?

How a Neural Network Learns (That The Image is a Cat) and Improves Over Time

Step 1: Making a Guess (Forward Pass)

At first, the detectives (neurons) know nothing. They take wild guesses based on clues. For example, an image recognition neural network might guess, “This is a dog!” When it is a cat.

Step 2: Checking the Mistake (Loss Function)

The chief inspector (loss function) checks the answers and calculates the level of wrongness (math in action).

If the network guessed ‘dog’ instead of ‘cat,’ it gets a high penalty (big error).

The penalty is smaller if it guessed ‘tiger,’ which is closer to a cat.

If it guessed ‘cat,’ the penalty is zero (perfect).

Step 3: Fixing Mistakes (Back Propagation & Weight Updates)

The chief inspector sends feedback to the network.

a) Discover the neurons that made the wrong call.

b) Adjust their ‘importance’ weight to fix the mistakes.

For example, if a neuron over-focused on ‘dog-like ears,’ the network automatically reduces its weight.

If a neuron noted ‘whiskers’ but was not confident, the network increases its weight as a reward.

Weight is the score assigned to a neuron based on correctness in analyzing a given piece of data.

Considering the detective analogy, a fingerprint in a crime scene is more important than a discarded candy wrapper. With experience, detectives learn which clues matter more.

Because neural networks are not human, they do not learn intuitively like the detectives. Neurons that make fewer mistakes are rewarded with higher weights until they become experts.

Neurons that make more mistakes are punished with low weights till they pivot and focus on something else.

c) The adjustments move backwards through the network—from the output layer to the hidden layers and then to the input layer. This process is called backpropagation (backward propagation of errors).

Step 4: Repeat Until The Network Gets Smart

The network keeps making guesses, checking mistakes, and adjusting weights millions of times until it gets good at recognizing patterns. Overtime:

The neurons that detect important features (whiskers, cat eyes) get stronger.

The neurons focusing on irrelevant details (random background colors) become weaker.

The network eventually becomes so accurate that it can recognize cats almost perfectly!

Okay, that’s great, but it still does not answer the question. How did the chief inspector, who one would assume is the output neuron, know it was a cat?

They are a magician well versed in the arts of Legilimency (magically accessing a person’s erm…machine’s brain to access thoughts, memories, and emotions to learn stuff.

Kidding! That only happens in Harry Potter. The chief inspector in neural networks is a mathematical function, and they know stuff because of labeled data.

The Chief Inspector Relies on Ground Truth (Correct Labels)

The ground truth (correct labels) comes from humans manually labeling the training data. The loss function calculates how far off the network's guess is from the real answer. Backpropagation corrects mistakes and refines the network over time.

The chief inspector doesn’t think on its own. It just compares guesses to human-provided correct answers in the training data. The better the training data, the smarter the network becomes!

What If There’s No Labeled Data? (Unsupervised Learning)

Sometimes, labeled data does not exist. In these cases, neural networks find patterns on their own.

For example:

Instead of labeling images as "cat" or "dog," a neural network might group similar images without knowing what they are.

This is how AI discovers clusters in data, like customer segments or genetic similarities.

What If the Chief Inspector is Wrong?

If the training data has mistakes or biases, the network will learn those too! This is why AI fairness and ethics are so important. It is imperative to ensure the "chief inspector" has correct information.

Still, even with the bias weakness, it's pretty genius. No?

Next up, training at scale. How did the behemoth that is ChatGPT happen?

Training at Scale: Imagine a Massive Detective Academy

So far, we've looked at a small team of detectives solving cases one by one. Real-world AI training is different.

It is similar to an entire academy of detectives (thousands of networks) solving cases together.

Here is how it works.

Step 1: Massive Datasets (Big Data)

Instead of training on a few crime scenes, the AI trains on millions of examples e.g. billions of words for large language models.

The more examples, the better the detectives (neurons) get at spotting patterns.

AI models like GPT are trained on massive datasets scraped from books, websites, and conversations.

That is why GPT is excellent at conversations; it learned conversation patterns through training.

You know how, in Mathematics, if you have the formula for the area of a triangle (½ of base x height), you can calculate the area of any triangle no matter how absurd the values?

Similarly, GPT learned the conversation formula through training. Now, no matter how absurd the question you throw at it, it simply applies the formula and gives you an answer🤯

Step 2: High Powered Computers (GPUs and TPUs)

Training a neural network requires massive computational power.

Instead of one detective writing notes by hand, imagine thousands of detectives using supercomputers to process clues at lightning speed.

This is where GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) come in.

GPUs are designed for parallel processing, meaning they can handle millions of calculations at the same time.

TPUs (used by Google) are even faster and explicitly designed for AI workloads.

Makes Sense, but I am Not Tech-Savvy. What Exactly is a GPU?

Visualize a GPU as a team of assistants working together to process book requests in a library.

Instead of handling one request at a time, they can fetch, sort, and deliver many books simultaneously.

This makes GPUs great for tasks that require lots of small calculations at the same time—like rendering images, gaming, and even AI.

In contrast, the trusty CPU in your laptop is like one librarian. They are perfect at handling one request for a book per time, but if you give them more requests, they slow down and crash.

Step 3: Parallel Training: Detectives Solving Cases Together

In AI training, multiple computers train the model in parallel, meaning they process huge amounts of data at once (like having trillions of detectives working together).

OpenAI trains models like GPT using thousands of interconnected GPUs across multiple servers.

For example, GPT-4 is trained on trillions of words, using thousands of GPUs running for months. That’s why it can generate human-like text. It has seen more data than any human ever will!

Step 4: Iterating Over Millions of Cases (Epochs)

One training round is called an epoch—where the AI sees all the training data once.

But one pass isn’t enough! AI trains over and over on the data until it reaches high accuracy.

Think of it like detectives reviewing past cases to refine their skills. Luckily, tools exist to make the process seamless.

Frameworks That Make AI Training Easier

Instead of coding everything from scratch, AI engineers use frameworks like:

TensorFlow (by Google)

PyTorch (by Meta/Facebook)

JAX (for even faster AI computations)

An AI framework provides pre-built functions for tasks like recognizing images, understanding speech, or making predictions, so you don’t have to write all the code yourself.

Conclusion

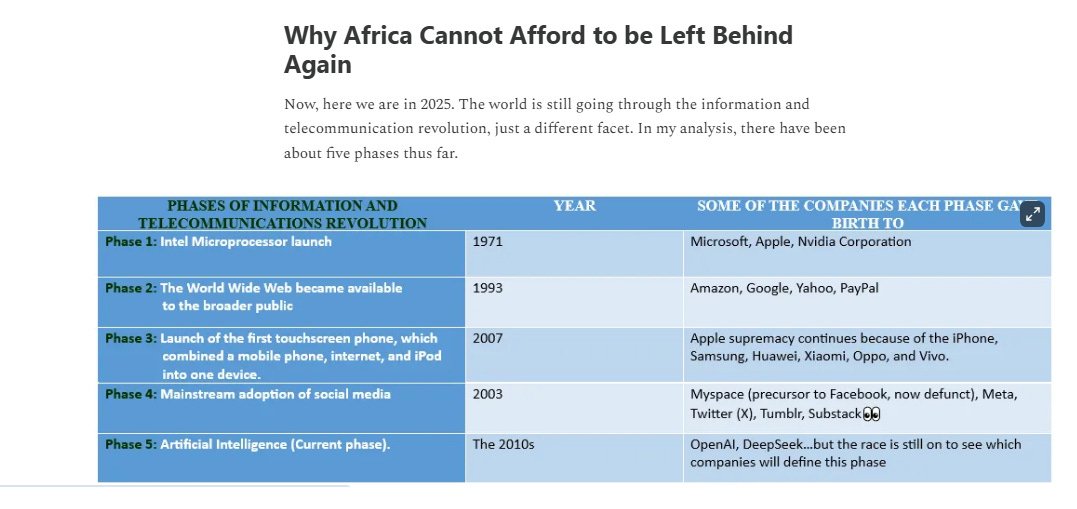

Having understood neural networks and how they gave birth to generative AI, the next step is to figure out how Africa can best leverage AI. That’s the topic of Part 3 of the AI series. However, here is a bit of a preview.

GPUs, for example, Nvidia’s RTX 4090 retails between $1600 and $2300. To acquire the thousands or hundreds of thousands needed to train a model would cost a pretty penny.

Okay, let’s assume some African countries can overcome the cost challenge. Next up is data. The continent has the worst case of data gaps. We could list the statistics, but nothing illustrates this better than a real-life example.

Dr. Sheila Ochugboju, the Alliance for Science executive director, once served in an advisory capacity in one African country.

“The data gaps, especially in agriculture,” she said, “were so stunning that one would even wonder what was the basis for decision-making. For instance, we didn’t have the figures for how much maize was being produced or even what challenges the farmers were facing. Most of the work we did was based on guestimates! We figured we needed systems to capture the data because we couldn’t work out solutions without the data.”

These two existing challenges reveal how difficult it will be for Africa to go to toe with the rest of the world on AI research.

Yet, as we argued in part 1 of the series, Africa cannot afford to get left behind. So what should the continent do?

See you in Part 3 for an answer.

Thank you for sticking this far. You’ve been reading Africa: Not an Afterthought. A publication that leads the conversation on how Africa can leverage technology, trade (particularly the AfCFTA), regional integration and Pan-Africanism to build a continent that is no longer an afterthought.

Today, it was all about technology! If you enjoyed this or found it useful, please